Who's Afraid of Big Bad A.I.?

A.I. can do a lot of things well, but some things are best left to the humans, no matter how convenient it may seem.

Another day, another 30 or 40 articles/think pieces about the new and wonderful (or new and terrifying) thing that an A.I. chatbot has done.

People can't stop talking about the things, and with good reason: They're impressive.

But I recently found myself in a work call discussing the applicability of ChatGPT to our team's work, and I felt myself firmly entrenched on the side of the curmudgeons. The luddites. The fogies behind the times. The doomsayers.

As someone guilty of loving technology products and always on the lookout for the next latest-and-greatest, I recoiled at this a bit. Why am I suddenly a hypocrite? Why don't I love ChatGPT and its brethren?

It's due to the nature of the things that I value. First and foremost is the written word. Because no matter how impressive it is (and will be) for ChatGPT to string together words that make sense in response to increasingly complicated (or nonsensical) prompts (and I assure you: I find it very impressive), it still pales in comparison to human writing.

What's more, I worry that it will lower the bar for all. It will appeal to the lowest common denominators of the written word. And on the internet, where things are already bleak for true practitioners of journalism, I worry that the masses will suddenly prefer the auto-generated. That they'll become accustomed to it, and worse yet that they'll forget to differentiate or value truly inspired writing, truly original writing, writing with a voice and character all its own.

I worry about that because it seems possible. It seems even probable. And as such, it puts jobs like mine (positions whose roles are purely communication-oriented) in jeopardy. Maybe not tomorrow, maybe not next year, maybe not even five years from now.

But I don't want these types of jobs to be automated so I can be freed up to do other things; there are no "other things" in these roles. The job is simply to share information. And that's still a noble thing to do with one's life.

The "free you up so you can do other stuff" argument is the same rationale that got an attorney into trouble recently. The New York Times reported a (honestly hilarious) story that shows where the time he saved could have been better spent.

For those who want a sneak peek at the juicy details: ChatGPT, that lil' stinker, just made up case law out of whole cloth. Not just the names of the parties, but the citations, the courts they were supposedly issued from, the whole kit-and-caboodle that a lawyer would cite when doing his own research and finding relevant case law.

But, but, but... the lawyer said he asked the program if the cases were real.

From the Times story:

It had said yes.

Oh, well in that case, problem solved! And as a result, this lawyer could face sanctions.

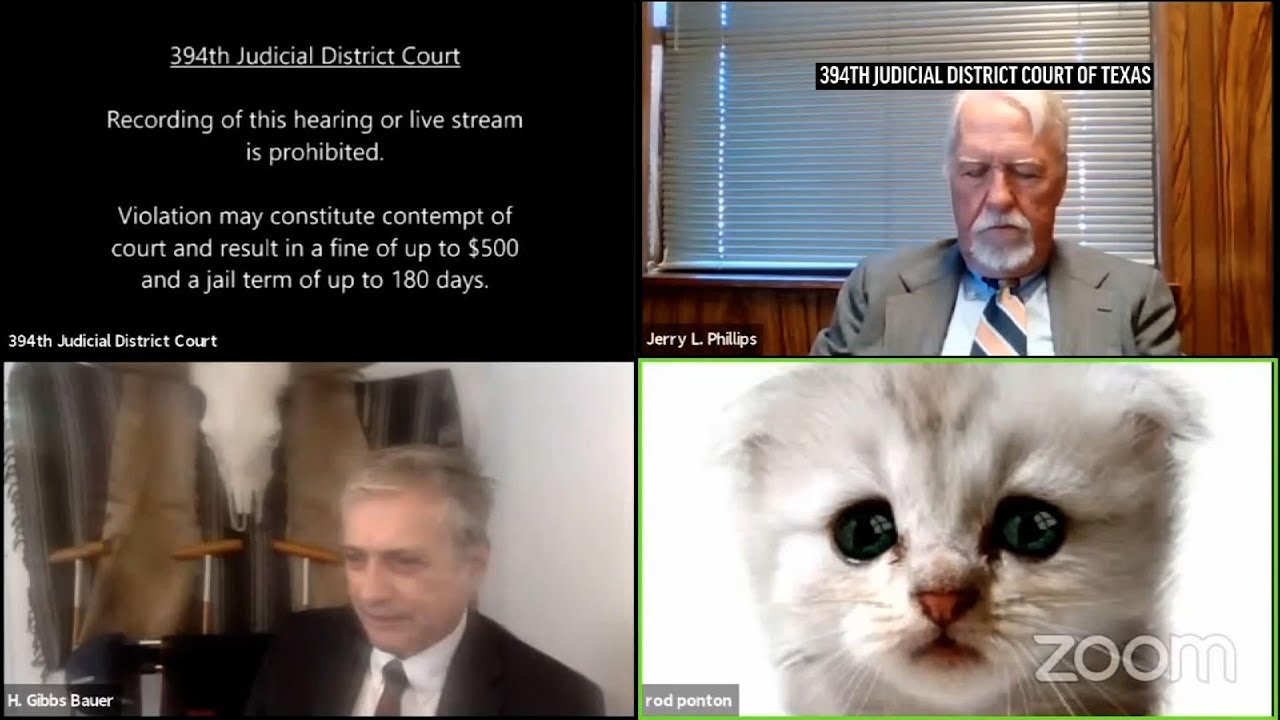

It seems like the attorney was just woefully ignorant of this new technology (not unlike our dear friend the Cat Attorney).

This isn't an isolated incident, mind you. Google's Bard chatbot got in on the action, too.

Warning to anyone contemplating the wonders of AI — the same thing happened when I asked Google’s Bard to explain how speed cameras don’t violate 6th Amendment right to confront your accusers.

It spit out 3 US Court of Appeals cases that did not exist. https://t.co/9iJgNhfiVM

— Steven Portnoy (@stevenportnoy) May 28, 2023

The Times story hit the nail on the head with what it is exactly that worries me, as a member of the "knowledge workers" class:

As artificial intelligence sweeps the online world, it has conjured dystopian visions of computers replacing not only human interaction, but also human labor. The fear has been especially intense for knowledge workers, many of whom worry that their daily activities may not be as rarefied as the world thinks — but for which the world pays billable hours.

This was inevitably an effort to save time, an understandable goal if ever there was one. It's not that different for pure creatives, namely writers of all the sorts of content that we love.

"Oh, the first draft can't hurt. Just let it spit something out, and I'll go in and refine it."

It sounds like a reasonable starting point, but so much of the value of writing is in the human spitting out that first draft and then refining their own work (or having other humans — in the form of editors — do that polishing).

I'm not worried about the grand stakes of A.I.'s influence, although worrying about them is certainly not irrational. Consider today's episode of the Times podcast, The Daily, which interviewed the "godfather of A.I.," who's mightily concerned about doomsday dystopias.

My stakes and my concerns (for now) are more limited. For all the advancements A.I. might bring us, for all the time it might save, my core belief is a simple one: The human mind is a terrible thing to waste.